3D: Is it just an overworked prefix latched onto nearly every aspect of our lives? Or is there more depth to the phenomenon? What does this exciting, intriguing, inspiring, and trendy term really hold for our future? To explore beneath the hype, we sought insights from four 3D experts: a futurist, an imaging innovator, a developer of 3D printing and manufacturing solutions, and a practitioner of 3D geodesign.

Recent events underscore the almost universal acceptance of and dependence on 3D in our everyday lives. One is the appearance of 3D lidar images in news reports about some of last year’s tragic landslides; another is the 3D printing of human skull elements for reconstructive surgery. Current news items about uses for 3D range from the amusing to the profound and inspiring.

The key takeaway from our interviews with experts is that while 3D—and many of the innovations, solutions and implementations thereof—is not necessarily new to developers and practitioners, this seemingly recent revolution might be more about the rest of us finally being ready for it.

Futurist – Jordan Brandt

Q: Your background is in architecture. The AEC (architecture, engineering, and construction) fields are somewhat “macro” in scale and not always thought of as leading the tech wave. How did your AEC roots lead to a role as a futurist?

JB: AEC for me has always been a blend of building and manufacturing: aerospace and [other industries]. If I’m in one too long I need to get back and gain perspective and immerse myself in the other.

And that’s what’s been fun about this role as a futurist. I came into the company through an acquisition of an AEC collaboration technology, and in becoming the futurist focusing first on manufacturing, I’m able to take a step out of AEC and gain perspective on what else is happening around the world and in other industries.

Now one of the big initiatives is to look ahead at how we will be designing and manufacturing things in 10 to 20 years and to look at this convergence of AEC and manufacturing holistically. Here at Autodesk, we see this convergence between the disciplines of the industries. If you think of architecture in building and the number of prefab components, what is the real dichotomy between that and traditional manufacturing, and how do we accelerate this convergence?

Why now? Why is 3D viewed as a new or renewed “boom”? What is different this time?

It’s putting 3D in the hands of more people. Think of the gaming generation: they grew up with 3D. Also consider that at the age of five months we’re able to perceive 3D. Everyone has a GPS device on their phone; everyone has Google immersive view; professionals in the field now have tablets instead of drawings so they can walk through a building or infrastructure project and experience a thing in real 3D.

You could also ask, “Why has it taken this long for 3D to happen?” I think (from a technology firm’s point of view, and from that of Autodesk, which has been developing 3D for over 30 years), it is putting 3D in the hands of more people. People now have so many devices on which they can stream 3D information; it has become absolutely ubiquitous.

With ReCap and Spark, Autodesk has jumped squarely into positioning and hardware. That is a pretty big jump from design software.

We even have tools in 360 for construction layout, right on your tablet. Our CEO [Carl Bass] has often said that, “When people design something, they typically do it with the intention of building something.” All of this is something that has been in the works for some time, this pulling from physical reality into the digital environment and then pushing from digital environment into a manufactured reality.

In terms of reality computing, we see this as all being part of the same ecosystem. It is very difficult to innovate in one of these areas without innovating in all of them now. We also have convergence of the hardware and software in these systems, and they have to interoperate. So, for us to be able to have the best design and construction software out there we have integration with these hardware systems.

What do you think the next decade holds for AEC, and are there key opportunities we might be overlooking as AEC professionals and industries?

I think AEC needs to look hard at investment in manufacturing and production technology; they often wait for production innovation to happen in other industries and then adopt it. The problem is that there is a unique challenge around scale and efficiency. It’s very hard to think of the large skyscrapers around us as manufactured objects, but that’s absolutely where it is going. AEC has to invest in that holistically. We are not just going to be able to borrow the technologies we need for this future; there needs to be more native R&D.

The next decade will see a further conflation of hardware and software; it’s going to be very hard to separate the two. A lot more artificial intelligence will be embedded. Think about all of the data from GIS, from resources, from manufacturing, from the production of buildings. We’re amassing this data in large quantities, a process being accelerated by cloud computing, so we are now able to centrally compute and process this information. How do we use that?

The only way for this data to be managed and actionable is to develop some method or notion of artificial intelligence and compute that in a meaningful way to be able to make decisions based upon it. I think that in the next 10 years we’ll see machine intelligence become pervasive in all kinds of computing in the built environment.

Early agriculture freed folks to do other things like art and science. Forty years ago lasers reduced the size of survey crews and machine control reduced the need for folks setting construction stakes. This next jump will automate even more. How will people be able to create value from this freed-up time?

People are going to form a much more collaborative relationship with the machines that they are using: software, hardware. Hopefully with that free time, once many of the mundane tasks are taken care of, they can invest in R&D. We can think about ways to actually make these technologies more productive—that might be my personal bias a bit as I’d like to see more R&D in [these areas]. If you are freeing up people who have very astute thinking about measurement and mathematics, and suddenly they have more time to think about how something is designed and measured and built, I’m pretty optimistic about that future.

Part of being collaborative with the machine is also being able to understand how the machine derives its answers. That does not mean that you have to be able to do all of the computations, especially now with such computation being increasingly advanced. But I think that part of having time to understand better how the machines operate and building better collaborative capabilities […] is better understanding your friend, your collaborator, how they tick, whether that is a human or a device or software.

So many cool or simply amusing things get tweeted about when it comes to 3D: the “Look what we 3D printed” or “Look at what statue we scanned.” What’s an example of reality computing with meaningful impact that we don’t hear about in the mainstream?

An example is automating a lot of the benign tasks that have to be done on a jobsite. Billing—what has been installed and who gets paid for it—is a great use of reality computing to automate that process so that somebody does not have to go out with a checklist and say that has been installed and that has not been installed so all of those people can get paid. We really do not have to have humans doing that kind of task; that individual could be doing something much more productive.

I think there are millions of tasks on construction sites that need this level of automation. We developed ReCap as a vehicle to get there. Can we efficiently and effectively process reality data and interface that with design intent? Perhaps that is a start, and it will eventually yield new methods of fabrication.
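Brandt does not describe how ReCap actually compares reality data with design intent. The following is a minimal sketch that makes two simplifying assumptions: the scan has already been registered to the design coordinates, and each design element can be approximated by an axis-aligned bounding box. Under those assumptions, an "installed or not" check for billing can count the scan points that fall on each element. `DesignElement`, `installed_report`, the tolerance, and the point threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass

# A point from a registered scan, in the same project coordinates as the design.
Point = tuple[float, float, float]


@dataclass
class DesignElement:
    """Hypothetical design element approximated by an axis-aligned bounding box."""
    name: str
    min_corner: Point
    max_corner: Point


def points_near(element: DesignElement, cloud: list[Point], tolerance: float) -> int:
    """Count scan points inside the element's box, grown by `tolerance` on every side."""
    count = 0
    for p in cloud:
        if all(
            lo - tolerance <= c <= hi + tolerance
            for c, lo, hi in zip(p, element.min_corner, element.max_corner)
        ):
            count += 1
    return count


def installed_report(elements, cloud, tolerance=0.02, min_points=3):
    """Mark an element as installed when enough scan points support it."""
    return {e.name: points_near(e, cloud, tolerance) >= min_points for e in elements}


# Two hypothetical columns (meters); only the first one shows up in the scan.
design = [
    DesignElement("column_A1", (0.0, 0.0, 0.0), (0.3, 0.3, 3.0)),
    DesignElement("column_B1", (5.0, 0.0, 0.0), (5.3, 0.3, 3.0)),
]
scan = [(0.1, 0.1, 0.5), (0.2, 0.15, 1.5), (0.31, 0.2, 2.8), (9.0, 9.0, 9.0)]
print(installed_report(design, scan))  # {'column_A1': True, 'column_B1': False}
```

Real progress-monitoring tools would need to handle occlusion, partial installation, and non-box geometry. A simple point count like this only shows the basic idea of checking scanned reality against design intent.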

On the 3D printing side, what is a compelling example of augmenting existing processes with 3D printing that one might not hear much about?

Computational materials are one of the most interesting areas in 3D printing, which is enabling us to do something we couldn’t do before. The first adoption cycle of any new technology is to do what you did before, which is why you see so many tchotchkes [trinkets] being printed. It’s cool, it’s new, it’s novel, but it is not really pushing the bar on technology or the future of society.

The idea around computational materials is that you are actually designing the material content along with the [geometry of the] part, and you are doing this at a scale that impacts the macro behaviour of the component. And you are able to design materials that never existed before and that exhibit properties that sometimes have not been perceived in materials since the big bang.
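Brandt does not say how Autodesk models computational materials. One textbook way to see how local material choices drive a component's macro behaviour is the rule of mixtures, also called the Voigt estimate. It approximates the stiffness of a two-material blend as the volume-weighted average of the two moduli, which is an upper bound that assumes both materials strain equally. The sketch below grades the blend voxel by voxel along a part. The function names and moduli are hypothetical.

```python
def voigt_modulus(fraction_a: float, modulus_a: float, modulus_b: float) -> float:
    """Rule-of-mixtures (Voigt) stiffness estimate for a two-material blend.

    fraction_a is the volume fraction of material A (0..1); the rest is material B.
    """
    if not 0.0 <= fraction_a <= 1.0:
        raise ValueError("fraction_a must be between 0 and 1")
    return fraction_a * modulus_a + (1.0 - fraction_a) * modulus_b


def graded_profile(n_voxels: int, modulus_a: float, modulus_b: float) -> list[float]:
    """Grade the blend linearly from all-B at one end to all-A at the other."""
    profile = []
    for i in range(n_voxels):
        fraction_a = i / (n_voxels - 1) if n_voxels > 1 else 1.0
        profile.append(voigt_modulus(fraction_a, modulus_a, modulus_b))
    return profile


# Hypothetical stiff material A (3.0 GPa) blended into a soft material B (1.0 GPa).
print(graded_profile(5, 3.0, 1.0))  # [1.0, 1.5, 2.0, 2.5, 3.0]
```

In this framing, the "design" is the per-voxel fraction, chosen together with the part geometry. Real computational-materials work uses far richer models than this linear bound.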

We’re in a mass data capture era, with highly prolific data capture and remote sensing devices everywhere with input from people and their devices, actively and passively. Do you think we will end up with more data than we’re ready for right now?

I think we have more data than humans can consume, but that’s why we need to develop the machine intelligence to be able to process the data and give us effective and useful—actionable—information. Obviously, the amount of data that we’re going to consume is going to increase exponentially, and the only feasible method is to invest in artificial intelligence to help make some of those decisions, to decide what is valuable, what [parts] we need to know to make a human decision and plot a path forward.

A lot of research now shows that a large quantity of data, statistically derived, gives you better results than a few points that we deem precise. And there is also an inherent bias for us to think of a specific point of data as being precise. But, I think, if you look into what we do and do not know about that point, we often find it does not have the validity that we used to think it did. One thing that reigns supreme (and quantum mechanics shows us this) is statistical probability. The 3D data [we are talking about] gives you a more complete picture. All of the data is in [context with the data around it], and you can [measure] with much more certainty.
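Brandt's point can be illustrated with the standard error of the mean. If independent measurements each carry random noise σ, then the mean of n of them has noise σ/√n. The sketch below assumes independent, unbiased Gaussian noise, which real scan data only approximates; systematic errors, such as a bad registration, do not average out. The noise level and sample counts are hypothetical.

```python
import math
import random
import statistics


def standard_error(sigma: float, n: int) -> float:
    """Standard deviation of the mean of n independent measurements with noise sigma."""
    return sigma / math.sqrt(n)


def simulate(true_value: float, sigma: float, n: int, seed: int = 1) -> float:
    """Average n noisy observations of true_value (simulated Gaussian noise)."""
    rng = random.Random(seed)
    samples = [rng.gauss(true_value, sigma) for _ in range(n)]
    return statistics.fmean(samples)


# One point measured with 5 mm noise versus 10,000 such points averaged.
print(standard_error(0.005, 1))  # 0.005
print(standard_error(0.005, 10_000))
print(abs(simulate(10.0, 0.005, 10_000) - 10.0))
```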

Yes You Scan

Jumping into scanning has always seemed a bit daunting, given the cost, the premium on processing, and learning the software. That has all changed in a few short years. For example, Faro and Autodesk have collaborated on “Yes You Scan,” a package designed for folks who want to scan and process data on a short-term basis without a major financial commitment (under $2,500).

Reality Capture – Dominique Pouliquen

The concept of bringing the fruits of all the varied imaging, scanning, and remote sensing technologies into easily viewed, managed, and analyzed geo datasets for planning and design has been implemented in many ways. For Autodesk, reality capture has been realized in a growing set of tools and solutions under a product line called ReCap. Available for years with elements productized for the desktop as well as online, ReCap is now hitting its stride.

Dominique Pouliquen, a well-known speaker, advocate for, and developer of 3D solutions, brought his extensive experience in imaging and visualization (he was CEO and founder of Realviz) to Autodesk and is their director of marketing and market development for reality solutions. We asked Pouliquen about what this concept of reality capture means in practical terms.

Q: The ReCap product line appears, at this time, to be grouped into three lines: ReCap Pro (desktop) and two cloud-based services, Real View on ReCap 360 and Photo on ReCap 360. How does each fit into the reality capture concept?

DP: ReCap Pro for the desktop serves as a bridge between the devices capturing the data, like scanners, and the design and analysis environments. You can register scans, clean up, segment, do regioning, and produce photorealistic clouds. But the key is that we do this in a quite “hardware agnostic” way. There are a few exceptions where you may need to use some part of a proprietary software package connected to a type of hardware simply for export, but generally all of the other tasks [I’ve noted] can be done inside ReCap.

The native exchange format for ReCap is .rcp, and it can be opened inside design software like AutoCAD, Civil 3D, Revit, and InfraWorks [standard in 2014 versions forward]. There is a nice point cloud engine, full snap and segment, etc., and we are continually adding more functionality, for instance automated feature recognition.

Real View on ReCap 360 is a cloud service where you can publish these 3D reality views for all of your collaborators and stakeholders to access. They get the corresponding pano scans in a private space where they can view, measure, and annotate. Photo on ReCap 360 is the same for imaging and close-range photogrammetry. Users can ask for a 3D model, a photo-based mesh, or an .rcp file of a photo-based point cloud.

What is the underlying concept, or even philosophy, behind the solutions that have been developed for ReCap? Are there specific advantages?

Users, companies, public agencies—they have a lot of different equipment for capturing data, and these are appropriate for different types of work. But there can be struggles in working with many proprietary lines of software to process and publish the data for design. With ReCap they can do the processing in an environment that is closer to what they are used to in their design software.

In our opinion, our cloud-to-cloud registration is very good—robust—and we have a very good way to report the accuracy of targets and registration. This is something we recognized early on as a priority for a lot of users.
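Pouliquen does not detail ReCap's registration algorithm or its report format. As a generic sketch, an accuracy report for target-based registration typically compares each target's registered position with its surveyed control coordinates and then summarizes the residuals. The target names, coordinates, and the choice of RMS as the summary statistic below are illustrative assumptions.

```python
import math

Point = tuple[float, float, float]


def target_residuals(registered: dict[str, Point], surveyed: dict[str, Point]) -> dict[str, float]:
    """3D distance between each target's registered and surveyed positions."""
    return {
        name: math.dist(registered[name], surveyed[name])
        for name in registered.keys() & surveyed.keys()
    }


def rms(values) -> float:
    """Root-mean-square of the residuals, a common one-number accuracy summary."""
    values = list(values)
    return math.sqrt(sum(v * v for v in values) / len(values))


# Hypothetical targets (meters) after registration versus surveyed control.
registered = {"T1": (10.002, 5.000, 1.000), "T2": (20.000, 5.003, 1.000), "T3": (15.000, 12.000, 1.004)}
surveyed = {"T1": (10.000, 5.000, 1.000), "T2": (20.000, 5.000, 1.000), "T3": (15.000, 12.000, 1.000)}

residuals = target_residuals(registered, surveyed)
for name in sorted(residuals):
    print(f"{name}: {residuals[name] * 1000:.1f} mm")
print(f"RMS: {rms(residuals.values()) * 1000:.1f} mm")
```

Reporting per-target residuals alongside the RMS makes it possible to spot a single bad target that would otherwise hide inside an acceptable average.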

Scans and photos are the top types of input that one might think of. Are there other forms of input that ReCap handles?

Yes. Part of the concept of reality capture is to have a composite of everything in the design environment: on the ground, under the ground, above and under water. Sonar is handled in a similar manner to scans, not yet registered directly to [terrestrial] scans, but with the same [coordinate base/reference framework] it can be in the same design environment. We are working on combined registration. We are also working on how to manage ground penetrating radar (GPR) in the same manner.

Like a lot of our readers, I have “Paleo-CAD” roots, starting with early versions of CAD and GIS software. The new world of 3D, scanning, close-range photogrammetry, and related software seems intimidating. How is the adoption of this new wave of 3D software like ReCap going?

For users new to their respective industries, 3D and the capture devices are expected and accepted as the norm. Many users do not realize yet that getting involved is not as daunting as they might think. They do not realize that there is this wonderful, new point cloud engine and that they can have the same interactive experience as in their AutoCAD platform.

Ready (Finally) for 3D – Elliot Hartley

How do current geo and engineering design practitioners view this “3D revolution”? Many practitioners were all-in on 3D long before it became trendy for the masses. We asked for insights from noted GIS/CAD/geodesign expert, lecturer, and GeoPlanIT blogger Elliot Hartley, director of Garsdale Design Limited in the UK.

Hartley specializes in planning, urban development, and visualization, and will employ any and all tools appropriate for his domestic and international projects, including but not limited to CityEngine, ArcGIS, AutoCAD, and SketchUp.

Q: What is different about the 3D of today over what GIS/CAD/design folks have had at their disposal for decades? What truly characterizes this “born-again-3D” era?

EH: This “born again” 3D we’re experiencing now has little to do with the professionals using it and more to do with who is consuming this 3D data. The standard office and home PC has only recently been able to do 3D easily without specialist hardware. It’s consumer hardware, I think, that has given 3D its time in the sun, properly this time. Think about it: the phone in your pocket can run 3D games, and mobile devices can view WebGL. [WebGL is a royalty-free JavaScript API, based on OpenGL ES, for rendering 3D in browsers without a plug-in; it is popular with geospatial software developers.] The science, software, and specialist hardware to do various 3D analysis and work have been around for ages. It is the consumer/generic office hardware market that has shifted 3D, not the specialist.

I think there are two main topics in 3D from my perspective. [One is authoring 3D content.] The big players have all had 3D modelers and analytical tools for some time. Autodesk, Esri, and others like Trimble (with SketchUp) have 3D authoring tools as well as the analytical side of things, too. Niche tools [that many advanced users know about] are established in certain industries, and you’ve needed sometimes-specialized hardware to use them or they have been confined to specialists. All of this has required technical/scientific knowledge of things like projections and accuracy, but basically the science behind it is well established.

As hardware has become more capable and powerful, complicated analysis is now easier to do than it ever was (just drag this and click here!). This is because the software has gotten more powerful and has taken away a lot of the knowledge requirements of the user. No command line tools are required for most users now.

[Another main topic in 3D is viewing and analyzing 3D models.] While 3D can be handled by most entry-level PCs now, there are still performance issues in viewing 3D when it comes to your PC’s memory and processing power. If you want to view a massive 3D city model (with or without textures), you’re going to need some kind of specialized PC or a streaming server. Look at the established players like Autodesk with InfraWorks or Esri with their upcoming streaming globe (based on WebGL). Then look at some interesting, smaller company offerings like the former Google X project called Flux (flux.io), or Agency9, or even ViziCities. Everyone has sensed an opportunity in the 3D market, and there is a gap for smaller players to come up as well as challenges in moving giant corporations forward.

3D seems to be coming at us from all directions. Will this lead to confusion and/or eventual constructive confluence?

Obviously, when talking about 3D, there are many industries: architects, planners, GIS professionals, retailers, and gamers all come from different disciplines, but these are slowly merging in places. Architects have always wanted nice, 3D graphic renders; planners have started to do the same at a different scale; now GIS professionals are looking to visualize their data in different ways. Of course gamers want increasingly photo-realistic-looking games, and now architects and planners are using their technologies. For example, look at game engines like Unity, Unreal Engine, and CryEngine—all are actively being used in these professions.

This merging of disciplines is very much where this idea of geodesign has come from, a more integrated approach to design that joins interlinked disciplines.

One fear about the advent of such simple-to-use and readily accessible software and geo data is that we may end up with far too many ill-informed “button pushers.”

The real problem on the horizon, I think, is on the technical side of things. Consumers (i.e., everyone else) will care little for details of accuracy and scale, yet for real decision makers those details are crucial. I can make a 3D city model for you today, but would I advise you to make all your decisions based on this 3D model, especially at the sub-meter level?

3D is coming. Cities are buying 3D city data and creating their own data, but these government agencies will be using it at a variety of scales: for example, from visualizing the authority’s assets (trees, lamps, etc.) or looking at revised master plans for a derelict site (policy), to actually informing decisions on such things as new residential apartment blocks (development control). These are all great uses of 3D and very useful, but as with most things, context is important!

Accuracy and precision: these are concerns that engineers and surveyors have with the idea of this newly branded “geodesign for all” movement (or is it simply engineering design in a new package?).

Architects and planners, for the most part, operate at different scales and therefore require different levels of accuracy. The real fight will be over where these scales and accuracy issues meet and how software will deal with it. Think BIM [Building Information Modeling], then think how an urban plan might work with that data [inches, centimeters, even millimetres], and then try to use the data in the context in which a GIS professional on a regional scale might use it [feet, meters, tens of meters, kilometres, miles].

Where do we draw the line? Where does GIS meet BIM meet CIM [City Information Model]? Keeping people cognizant of the respective requirements for projections, datum, precision, and accuracy will be of utmost importance.
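Hartley's point about scale can be made concrete with a simple fitness-for-use check. This is a hypothetical sketch, not any vendor's feature. It normalizes units before comparing a dataset's stated accuracy with the tolerance a task requires. It deliberately ignores projections and datums, which need a proper coordinate transformation library.

```python
# Exact conversion factors to meters for the units mentioned in the interview.
TO_METERS = {
    "mm": 0.001,
    "cm": 0.01,
    "in": 0.0254,
    "ft": 0.3048,
    "m": 1.0,
    "km": 1000.0,
    "mi": 1609.344,
}


def to_meters(value: float, unit: str) -> float:
    """Convert a length in one of the supported units to meters."""
    return value * TO_METERS[unit]


def fit_for_use(data_accuracy: float, data_unit: str,
                required_tolerance: float, required_unit: str) -> bool:
    """True when the dataset's stated accuracy is at least as fine as the task requires."""
    return to_meters(data_accuracy, data_unit) <= to_meters(required_tolerance, required_unit)


# A hypothetical city model accurate to about 0.5 m:
print(fit_for_use(0.5, "m", 10, "m"))  # True: adequate for regional GIS analysis
print(fit_for_use(0.5, "m", 5, "mm"))  # False: not adequate for BIM-level detailing
```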

Fixing the Broken Chain – Aubrey Cattell

There is far more to the 3D printing movement than the hype. As Jordan Brandt notes, there is a conflation between hardware and software and disciplines. What appears, from the outside, to be a fad is having a tremendous positive impact not only on manufacturing and materials science but also on AEC.

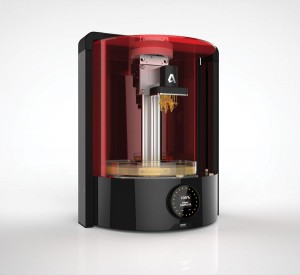

Autodesk has launched an initiative, product line, and collaborative community called Spark that seeks to bridge these rapidly intersecting worlds and is confounding legacy conventional wisdom by doing so in an open source manner. Aubrey Cattell, Autodesk’s senior director of business development and operations, shared some of his insights about Spark, his passion for 3D, and the promise it holds (see the video of the full interview online at xyHt.com).

Why has Autodesk suddenly jumped into not only the positioning area, with solutions like ReCap, but now such things as 3D printing?

AC: While our mission is to help folks imagine, design, and create a better world, for 30 years we’ve really been focused on the design and imagining elements. But in listening to our customers who are seeing the hardware and software elements converging, [we heard] they wanted us to forward integrate into the create piece, to help them build things, manufacture things.

We are excited about the promise of something like 3D printing. At the same time, like a lot of the industry, we had been frustrated with the results early on. There was this broken tool chain: if you want to 3D print something, you have to start with a 3D model; it has to be the right kind of model and have the right properties; you have to check it, seal it to be watertight, adaptively thicken it or hollow out parts so you don’t spend too much on materials, create support structures, and optimize tool paths. There are lots of design considerations. Then you need to be able to send the design code to a variety of types of printers using a variety of different types of materials, and that all lends itself to a process that can have multiple points of failure: points of failure that lead to inefficiency and simple failure of the finished products.
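Cattell lists checking that a model is watertight as one link in the chain, but Spark's own checks are not described here. The sketch below assumes the model is an indexed triangle mesh and applies a common first test: every edge must be shared by exactly two triangles. This is a necessary condition only. Consistent face orientation and self-intersections need separate checks.

```python
from collections import Counter

Triangle = tuple[int, int, int]  # indices into a vertex list


def edge_counts(triangles: list[Triangle]) -> Counter:
    """Count how many triangles share each undirected edge."""
    counts = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[frozenset((u, v))] += 1
    return counts


def is_watertight(triangles: list[Triangle]) -> bool:
    """Closed-manifold test: every edge is shared by exactly two triangles."""
    counts = edge_counts(triangles)
    return bool(counts) and all(n == 2 for n in counts.values())


# A tetrahedron (four triangular faces over vertices 0-3) is closed.
tetrahedron = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
print(is_watertight(tetrahedron))      # True
print(is_watertight(tetrahedron[:3]))  # False: a missing face leaves open edges
```

Edges with a count of 1 mark the holes that would need sealing before printing. Counts above 2 indicate non-manifold geometry.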

So that was the idea behind Spark, to fix this broken chain. We said, if we are really going to connect hardware to software, to bridge the gap between digital content and actual physical matter, we really needed to create an open platform that others can build on, because we know how to build software, and we know how to solve some of these fundamental problems.

Think of the example of PostScript [a page description language for describing text and vector graphics], which sort of became the lingua franca for 2D printing and replaced a lot of the other solutions out there that were not really working well together. That helped 2D printing to really take off. We can do this for 3D.

How might this boom in 3D printing innovation help the AEC industries?

In the AEC world you can see the influence of 3D models in machine control, the design on a chip you can put on a grader for heavy construction. What we see from our customers is that the industries are converging. In so many elements of building and construction (there is even 3D printing of concrete), [there’s] the ability to 3D print complex latticework, incredibly strong and light, using very little material. There is all manner of data input that can be entered into the design of what you want to build, that can inform all levels of what goes into the materials and characteristics of the pre-engineered elements of construction and building.

But you also jumped into the hardware arena, actually building a 3D printer.

We wanted a way to drive adoption across the industry, and that is really important to us because we want to spur innovation, new use cases, new applications, breakthroughs in materials, new hardware approaches. In order to show the way for our platform, we needed to walk the talk ourselves and show what an integrated software and hardware experience could look like, and that is the rationale behind building our own printer. Like when Google introduced Android, they built the Nexus One smartphone that could demonstrate the integrated features of the OS.

Open is a big part of what we are trying to do with Spark, and it works on three levels: open software with an extensible API layer; open hardware (the design for our machine is publicly available under a permissive license so that others can use it as a starting point for their own innovation); and an open approach to materials. A lot of 3D printing manufacturers have a closed approach to materials, like with inkjet cartridges. We want to open it up so that others can create materials formulations that will work with our printer.