A unique pro bono collaboration creates the first-ever integration of multiple technologies for mapping a historic monument.

The battleship USS Arizona was bombed during the Japanese attack on Pearl Harbor on December 7, 1941. The ship exploded and sank, killing 1,177 officers and crew. It now lies at the bottom of the harbor.

In 1961, Elvis Presley performed a concert attended by more than 4,000 people to raise funds for the USS Arizona Memorial, to be dedicated to all who died during the attack. More than $65,000 was raised, and Elvis stipulated that every penny go to the memorial fundraising effort. This was the beginning of a relationship between the USS Arizona caretakers and numerous private companies.

An aerial view of the USS Arizona Memorial with a US Navy Tour Boat, USS Arizona Memorial Detachment, moored at the pier as visitors disembark. Credit: JAYME PASTORIC, USN

In 2014, more than 1.7 million people visited the Pearl Harbor visitor center, making it the most popular visitor destination in Hawaii. Visitors are brought by boat to the white memorial building that straddles the ship’s final resting place. A plaque in the center shows one picture from before the attack and another from after.

Creating a Model

“Thirty years ago, some amazing hand-renderings of the USS Arizona were produced,” says Peter Kelsey, strategic projects executive with Autodesk. The National Park Service (NPS) describes how those measurements were taken in the early 1980s: with underwater visibility of only six to twelve feet, about half a mile of string was laid over the ship to establish straight lines where none existed, because the 608-foot ship was a mass of twisted and curved metal.

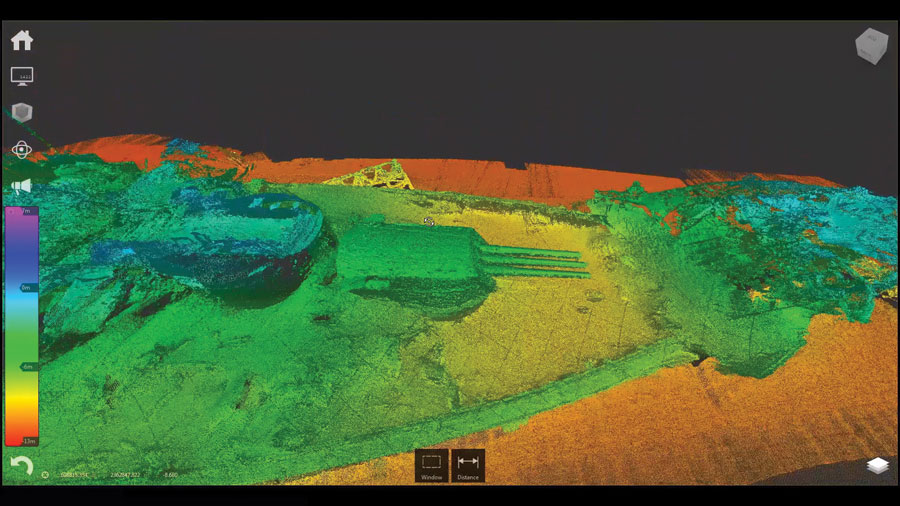

For this 3D project, Daniel Martinez, chief historian at the USS Arizona Memorial, says, “The goal was to combine lidar, sonar, aerial imaging, and existing photography and surveys to produce a survey-grade 3D model of the USS Arizona.”

In 2014, Kelsey approached potential partner firms to create this first-ever test: integrating multiple sensors, on the surface and underwater, with existing surveys and aerial photographs. At the same time, Scott Pawlowski of the NPS approached the US Navy and US Coast Guard to see if they were interested in using this project for training. “This was a stellar opportunity. This project pushed the limits of technology and resulted in totally new boundaries,” says Pawlowski. “There were many big successes and, of course, a few small failures.”

The Teams

The Deep Ocean Engineering USVH-1750 heads under the memorial to scan where the Boston Whaler couldn’t.

Autodesk was one of many companies involved in gathering the 3D data. R2Sonic, eTrac Inc., 3D at Depth, HDR Inc., Deep Ocean Engineering, and several other companies donated time, equipment, and expertise to the venture without compensation. Combining multiple technologies for the first time took an enormous amount of coordination and cooperation, and integrating the individual datasets into a single point cloud was itself a first.

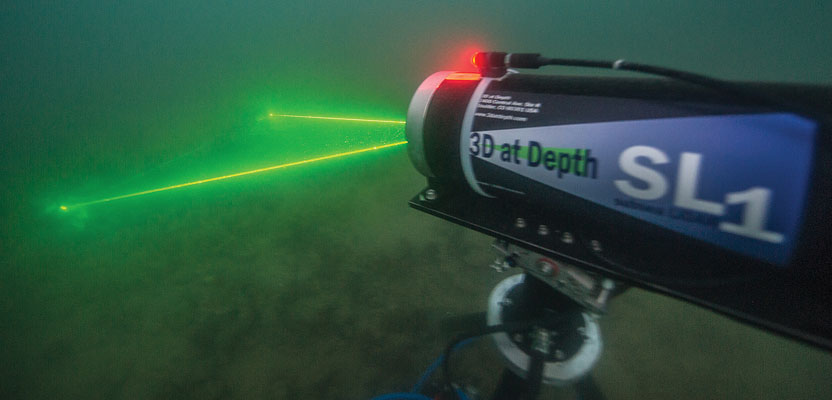

The project involved terrestrial laser scanning (provided by Sam Hirota, Inc. and Gilbane Building Co.), multibeam sidescanning sonar (provided by Oceanic Imaging Consultants, Inc.), subsea lidar and diver-portable sonar (provided by 3D at Depth and Shark Marine Technologies, Inc.), and photogrammetry (provided by Autodesk and the NPS).

Data Stream Integration

At first, “the group was ready to get started with the lidar, sonar, and multispectral data collection. However, the first job was to get everyone to agree on the survey reference point for the work. For that we were directed to the benchmark at the visitor center managed by the National Park Service,” says Mike Mueller of eTrac.
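Agreeing on one benchmark matters because each sensor records positions relative to its own origin. The sketch below is a minimal illustration, assuming a simple east/north/up frame in metres and a purely translational offset between each team’s local origin and the benchmark; the `Point` type and `to_benchmark_frame` function are hypothetical and do not represent any participating company’s software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    east: float   # metres east of the frame origin
    north: float  # metres north of the frame origin
    up: float     # metres above the frame origin


def to_benchmark_frame(local_points, origin_offset):
    """Shift points measured relative to a team's local origin into a frame
    centred on the shared benchmark.

    origin_offset is the (assumed, surveyed) position of that local origin
    relative to the benchmark. Only a translation is applied here; a real
    workflow would also handle rotation, scale, and datum differences.
    """
    return [
        Point(p.east + origin_offset.east,
              p.north + origin_offset.north,
              p.up + origin_offset.up)
        for p in local_points
    ]


# Two hypothetical teams observe the same feature from different local origins.
lidar = to_benchmark_frame([Point(1.0, 1.0, -2.0)], Point(10.0, 5.0, 2.0))
sonar = to_benchmark_frame([Point(14.0, -2.0, 1.0)], Point(-3.0, 8.0, -1.0))
print(lidar == sonar)
```

Once both datasets are expressed relative to the same benchmark, the two observations of the feature coincide, which is what allows separately collected point clouds to be combined.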

Hydrographic surveys are more difficult than land or aerial surveys. At the time of this project, most unmanned surface vehicles did not carry multibeam equipment because of limited battery power and durability, and surveying from the surface brings its own challenges because the survey vessel is constantly moving. Deep Ocean Engineering’s vessel was useful because it could navigate under the bridge of the memorial, but it lost its GPS fix there, so inertial data was used to georeference those locations.
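The idea of carrying a position forward from the last GPS fix can be illustrated with simple dead reckoning. This is a simplified sketch, not the method used on the project: it assumes the inertial system reports speed and heading at fixed intervals, whereas a real inertial navigation system integrates accelerometer and gyroscope data and is usually blended with GPS in a filter. The function name `dead_reckon` and its inputs are hypothetical.

```python
import math


def dead_reckon(last_fix, samples):
    """Propagate a position from the last GPS fix using heading/speed samples.

    last_fix: (east_m, north_m) at the moment the GPS fix was lost.
    samples:  iterable of (dt_s, speed_m_per_s, heading_deg), with heading
              measured clockwise from north (an assumed input format).
    Returns the estimated (east, north) position after each sample.
    """
    east, north = last_fix
    track = []
    for dt, speed, heading_deg in samples:
        heading = math.radians(heading_deg)
        # Distance travelled in this interval, split into east/north parts.
        east += speed * dt * math.sin(heading)
        north += speed * dt * math.cos(heading)
        track.append((east, north))
    return track


# Hypothetical run under the memorial: 10 s heading east, then 10 s heading north.
track = dead_reckon((0.0, 0.0), [(10.0, 1.0, 90.0), (10.0, 0.5, 0.0)])
print(track[-1])
```

Because each step adds to the previous estimate, small sensor errors accumulate over time, which is why positions estimated this way are normally re-anchored as soon as a GPS fix returns.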

Because everyone used this same reference point, all of the data points could be tied together. The R2Sonic multibeam echosounders were able to generate distance measurements with beams as narrow as 0.03 degrees. “This is the first time ultra-high-resolution systems have been used in the blue water environment like this. This is a great application using sonar with a macro facility just like you use a macro lens on a camera,” says Jens Steenstrup of R2Sonic.
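Why a narrow beam matters can be shown with basic geometry: the patch of seabed or hull that a single beam illuminates grows with range and beamwidth. The sketch below assumes normal incidence on a flat target and uses the approximation w = 2R·tan(θ/2), where R is the range and θ the full beamwidth; it ignores incidence angle, pulse length, and sound-speed refraction, and the 10-metre range is a hypothetical value, not a figure from the survey.

```python
import math


def beam_footprint(range_m, beamwidth_deg):
    """Approximate width of the area lit by one sonar beam.

    Uses w = 2 * R * tan(theta / 2) for a flat target at normal incidence,
    where R is the range in metres and theta is the full beamwidth.
    """
    half_angle = math.radians(beamwidth_deg) / 2.0
    return 2.0 * range_m * math.tan(half_angle)


# Hypothetical 10 m range with the 0.03-degree beam quoted in the article.
print(f"{beam_footprint(10.0, 0.03) * 1000:.1f} mm")
```

At that assumed range the footprint works out to roughly 5 mm, which illustrates the “macro lens” comparison: the narrower the beam, the finer the detail each sounding can resolve.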

3D view of the forward section of the USS Arizona, combining a point cloud of multiple data sources for a never-before-seen view.

eTrac Inc. helped fine-tune the positioning for the shallow-water and deeper-water sonar datasets.

Autodesk then merged these datasets, producing a centimeter-accurate sonar point cloud that ties in with the millimeter-accurate lidar dataset.

The effort had a two-fold result. The first was the personal satisfaction of helping create a baseline for monitoring the condition of the USS Arizona. The second, technical result is a clear demonstration that multiple data streams can be integrated into a point cloud of exceptional accuracy in a very difficult environment. “Now,” says Kelsey, “we are able to create full 3D models for analysis, simulation, and visualization for education purposes.”

One potential outcome of this integration of data streams is in automation. “We see making complicated systems easy to use. We could have any vessel such as a freighter be a survey vessel,” says Steenstrup.

“Technology integration is the future, not just in the underwater world, but in all surveying,” says Mueller. “We didn’t know where this project was going to lead us, but the survey of the USS Arizona was the perfect forum for disparate companies to work together.

“When you consider what was done, this was the perfect environment to test the technology completely. We now have a survey-grade point cloud of the USS Arizona. We hope to be able to replicate the survey every five years or so and see how the USS Arizona is withstanding its environment.”

Underwater lidar scans at night on the USS Arizona; Shaan Hurley of Autodesk photographed the scanner, which was provided by 3D at Depth.

From an interpretive perspective, a broader view of the ship is now available. A small model of the USS Arizona is on display, but in Martinez’s view it is not accurate. “We hope to use 3D printing technology to make a larger (up to six feet long), detailed model of the USS Arizona as she currently sits in the harbor. People throughout the world will have the opportunity to see the USS Arizona as she is today. As a side benefit, data was gathered on the USS Utah, which also rests nearby on the harbor floor,” says Martinez.

A 2D computer-generated print of the USS Arizona in its current condition was presented at the December 7, 2014, commemoration.