Mobile 3D sensing devices are big news right now. Google’s Advanced Technology and Projects (ATAP) group caused a stir on social media and tech blogs when it announced one in February 2014. Code-named Project Tango, this Android smartphone-like device tracks its own position and orientation while simultaneously building a 3D model of its surroundings. Google released a tablet version a few months later. Both devices were still prototypes and, much like the rollout of Google Glass, were available only to select developers.

Google isn’t the only one making noise. In August 2014, Occipital, a TechStars startup, began shipping its Structure Sensor, a 3D sensor that attaches to an iOS device and lets any developer build mobile applications involving 3D scanning. The Structure Sensor launched on Kickstarter in September 2013, raised nearly $1.3M, and became the sixth most successful project ever in Kickstarter’s technology category.

Meanwhile, Apple bought PrimeSense, the 3D sensor company behind the original Microsoft Kinect gaming sensor, after Microsoft replaced PrimeSense’s technology with its own; Intel announced its RealSense 3D camera for its PC and tablet customers; and even Google’s Project Tango partners began selling their own mobile 3D scanning devices.

As tech media outlets announced the arrival of “3D tech” to the world, most people were excited by the games, indoor navigation, and shopping experiences these devices would enable. New buzzwords such as “reality computing” and “spatial computing” emerged.

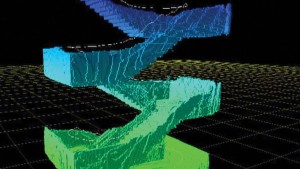

The mapping community responded with enthusiastic curiosity layered with skepticism and some condescension, because point clouds, photo-realistic 3D models, and simultaneous localization and mapping (SLAM) algorithms are nothing new (see xyHt’s December 2014 cover article). The geospatial industry has seen its share of handheld devices, high-end mapping carts, and YouTube videos of robotics students hacking Microsoft Kinect sensors. Rather than the consumer applications that excited the media, mapping specialists wanted to know how accurate and stable the resulting 3D maps would be.

Mapping Democratization

Yet this moment is significant, and its importance goes far beyond the question of accuracy. It means that mapping technologies have reached a much bigger stage. Like many technologies that began in academic and military research labs, they progressed to the realm of professionals and specialists and have finally entered the homes and offices of mainstream users.

In other words, the release of mobile 3D scanning devices marked the start of the democratization of mapping. Making accurate maps is suddenly affordable, easy, and accessible to the masses. Here’s why.

3D sensing mobile devices take the form and function of familiar mobile devices, which means they are portable and handheld and run familiar operating systems such as iOS, Android, and Windows Mobile.

Most are available for about $1,000 to $2,000 (USD), which makes them extremely affordable compared with professional mapping equipment.

They map the one place that really matters to most people: indoor spaces. Indoor mapping has long been called “the next frontier” because people spend more than 80% of their time indoors. However, relevant indoor maps have been hard to come by because the available tools were too expensive, required specialists to operate, and were too time-consuming to update frequently. None of this is the case anymore.

These mobile 3D sensing devices will transform the industry. They make indoor mapping practical by combining 3D sensing with all the benefits of a smartphone: powerful processing, instantaneous communication, internet access, a polished user interface, an ecosystem of applications, portability, and a huge community of developers who can build on these capabilities.

As a result, 3D sensing mobile devices will enable a level of automation that supersedes current mapping practices. Users will no longer need to transfer data from a device to one software package for processing, then hand off the results to another package to get the information they want. Besides, most users don’t want a dump of raw 3D data, and they have neither the interest nor the expertise to process a point cloud or align camera frames. They simply want their problem solved quickly, in one step, and they don’t care how it’s done.

Future workflows will perform three steps automatically and rapidly, with minimal user interaction: acquire data, process the data, and use the results to solve a human problem.

Although the geospatial results from mobile 3D sensing devices may not yet be accurate enough for survey applications, sensors and systems will improve and should eventually approach survey grade. Still, the most important change is the transformation of geospatial workflows into fully automatic processes that solve human problems at an unprecedented scale.

Maybe that’s why new category names such as “reality computing” or “spatial computing” are needed. People want something grander to describe the changes happening in mapping. The convergence of spatial, mobile, and robotic technologies is unleashing unforeseen applications for mainstream users and providing an unprecedented degree of personal spatial awareness. The world will witness possibilities beyond what we can imagine today. These are very exciting times.