Above: An autonomous farm tractor drives on a demonstration course at Trimble Dimensions. In addition to following predefined paths and instructions, the on-board tractor systems can sense and react to obstacles.

Trimble launches a new solution for construction project management and takes a futuristic leap into autonomous construction vehicles.

by Matteo Luccio and John Stenmark

Editor’s note: What innovations in technology and operational solutions will have a strong impact on the construction sector? We’ve been asking key AEC solution providers and their customers what elements of new tech could make the biggest difference for their enterprises.

A lot of familiar terms represent innovation and disruptive technologies: the cloud, apps, mobile data, big data, and autonomous vehicles. Construction is not shying away from such solutions. At the recent Trimble Dimensions 2016 user conference and exhibition, we were treated to demonstrations of their new project management suite (ProjectSight) and their new autonomous vehicle systems.

Project Site

The proliferation of mobile apps for the construction industry is improving the productivity of each trade but creating information “silos” that can cripple project management. To reconcile the competing desires for ease of use and for a single point of access to all the information, Trimble has created a cloud-based mobile collaboration platform called the Trimble ProjectSight system.

Launched at the Trimble Dimensions 2016 conference, it enables teams to collaborate and to share plans and building models. It is “a singular source of truth that all stakeholders can share and contribute to,” says Marcel Broekmaat, market manager for Trimble Buildings GC/CM.

The Problem

“Not long ago, the Project Controls team was almost always at the center of the action,” Broekmaat explains. “Many of the largest contractors frequently rely on sophisticated enterprise integrations to automate everything from HR to AR, optimizing workflows using the same sources of data.” In this environment, they would also share plan sets, work orders and formal documentation.

If there is a downside to highly flexible and integrated systems, it might be in the complexity perceived at the user level.

Over the past five years or so, however, the way that general contractors manage building projects has shifted remarkably, Broekmaat says. Now, suddenly, there are apps for every task, such as keeping track of punch-list items or distributing drawings, and they have been rapidly adopted by people in the field. Each trade on a worksite is potentially using its own method of managing work.

“The problem,” Broekmaat says, “is that you create silos that are difficult to tap into if you need to answer questions if you are not a user of that app. For example, a supervisor or a project manager in a meeting in the trailer doesn’t know where to look for the latest set of information.”

“At the end of the month, project managers typically find themselves wading through email, text messages, formal requests for information, checklists, safety warnings, etc., just to explain billing and scheduling discrepancies.”

Another continual challenge to collaboration, whether between office staff and field staff or between contractors and subcontractors, Broekmaat explains, is context. “Anyone who’s visited a large construction setting can appreciate the potential for confusion when there is a question about a certain design detail,” he says. “Whether it’s ductwork, wall layout, electrical, or glass, teams need to have a shared understanding of exactly where the issue lies on site.”

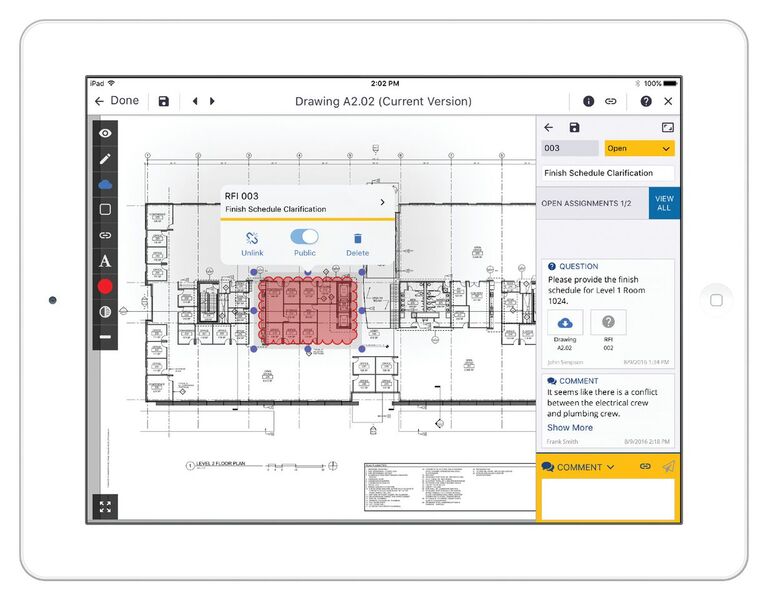

ProjectSight makes the official plans and models the shared medium for collaboration, he argues. “Markups, notes, photos, and documents can be affixed directly on the plans and be accessible by everyone that needs them.”

Origins

Broekmaat was trained as a structural engineer and in construction project management. He started his career at a construction firm in The Netherlands, then joined a company called Graphisoft in Hungary, maker of ARCHICAD, as product manager for Constructor, the first integrated virtual design and construction solution.

The team that built that product was then spun out as Vico Software, and Broekmaat was one of its cofounders. It built Vico Office, an integrated solution for BIM-based cost planning and schedule planning.

In 2012, Trimble acquired Vico Software, and Broekmaat took on the role of market segment manager for Trimble GC/CM, responsible for the project controls product line, which now includes the ProjectSight system.

“It came out of a long history with project management,” he says. It was developed by the same team that developed the industry-standard Prolog project management solution. “We’ve come up with a new solution for project management that melds project communications with plan management and documentation in the design context.”

One of the roles of ProjectSight is as a cloud hub for construction data, drawings, documents, and collaboration. Here, team members are communicating via comments and markup tools about the finish schedule for a specific room. Teams access one source (in the cloud and in real time) for construction documents and can add comments, images, and files to their communications. An advantage is that all transactions are logged and recorded in one place.

Not CAD or BIM

ProjectSight is not a CAD system and not a BIM authoring system, Broekmaat says, although it uses information from both.

“So, if your project team has produced drawings using a CAD system, then the CAD drawings can be brought in as PDFs to provide the context for project management data. Without the context, it is just a list of data. If you link that to mark-up in a drawing, then it becomes information that is easier to digest.”

“The same thing is true for the BIM information: if you have SketchUp or Revit models, you can bring them into the Trimble Connect collaboration platform, and ProjectSight can then tap into that to provide the spatial context.”

Surveyors, too, can become ProjectSight collaborators with specific issues or tasks assigned to them on the job site, such as new drawings or an area that requires special attention. However, the system is not designed for them to store surveying measurements. To do that, they can use Connect, which integrates with the surveying software that talks to Trimble surveying equipment. The layout is also not planned from ProjectSight.

“If there is a question that a surveyor needs to answer, we can log it in ProjectSight and assign it to a surveyor,” says Broekmaat. “Then, everybody in the project can see the surveyor’s answer so that there is no need to write several email messages or to post documents in various places.”

Conversely, the surveyor may use the system to post a question that somebody else in the organization may be able to answer.

Other vendors provide solutions focused on distributing and marking up drawings. “Those are great tools, too, but they lack the ability to store the project controls data in a database and do not allow you to configure the security features to specify who has access to the data,” says Broekmaat.

“Our solution has the full back end of a project control system, which means that you can specify every user’s level of access to the system.” Additionally, he points out, ProjectSight has built-in support for BIM.

Users of Trimble Connect can create views, then link to them directly from a ProjectSight project.

“Typically,” Broekmaat explains, “the overall project consists of tens of models. You never need all of them.”

For example, if you need five models to review a floor–such as the technical model, the structural model, and the architectural model–you can store them in Trimble Connect and then access them directly from ProjectSight.

“If you have a punch item, a work directive, or a safety issue, you can put its description into the record in ProjectSight and then link it directly to the 3D model view. When you click on it, that view loads inside ProjectSight. So, you can access directly all five models that you need in order to say something about that safety issue.”
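The linking Broekmaat describes can be pictured as a small data model. The sketch below is purely illustrative and is not the ProjectSight or Trimble Connect API: the class names `ModelView` and `IssueRecord`, the `open_view` method, and the role names are all hypothetical. It assumes a record carries a description, a link to one saved view, and a set of roles allowed to open it.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class ModelView:
    """Hypothetical saved view: only the models needed for one review."""
    name: str
    model_names: List[str]


@dataclass
class IssueRecord:
    """Hypothetical project record (punch item, work directive, safety issue)."""
    kind: str
    description: str
    linked_view: Optional[ModelView] = None
    allowed_roles: Set[str] = field(default_factory=set)

    def open_view(self, role: str) -> List[str]:
        """Return the models to load, or raise if the role lacks access."""
        if role not in self.allowed_roles:
            raise PermissionError(f"role {role!r} may not open this record")
        if self.linked_view is None:
            return []
        return list(self.linked_view.model_names)


# Example data is invented for illustration.
floor_view = ModelView(
    "Level 2 review", ["architectural", "structural", "technical"]
)
issue = IssueRecord(
    kind="safety issue",
    description="Unprotected slab edge",
    linked_view=floor_view,
    allowed_roles={"superintendent", "surveyor"},
)
print(issue.open_view("surveyor"))
```

Keeping the view as a separate object means several records can point at the same saved set of models, which matches the idea of creating a view once and linking to it from many issues.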

An Interview with Trimble’s Autonomous Technologies Guru

by John Stenmark

Dr. Ulrich Vollath is the director of autonomous technologies at Trimble. He studied physics and computer science and holds a PhD in computer science from the Technical University of Munich. Vollath joined Trimble in 1993 as a research and development engineer for GNSS software.

At the Trimble Dimensions User Conference in November 2016, Vollath demonstrated some of the autonomous capabilities he and his team have been working on. We interviewed Vollath for an update and more detail on the progress and state of autonomous technology.

xyHt: Please provide an overview of Trimble’s approach and philosophy to technologies that support autonomous operations.

Vollath: Trimble has more than 20 years of experience in machine control, guidance, and semi-autonomous operation. It began in the 1990s with bulldozers in construction and tractors on the agricultural side.

The approach we have taken so far follows the progressive addition of new and mature technology. Our approach is similar to what is taking place in the car industry where you have a driver-assistance system that steadily becomes smarter and smarter. At some point the system can take over the full machine.

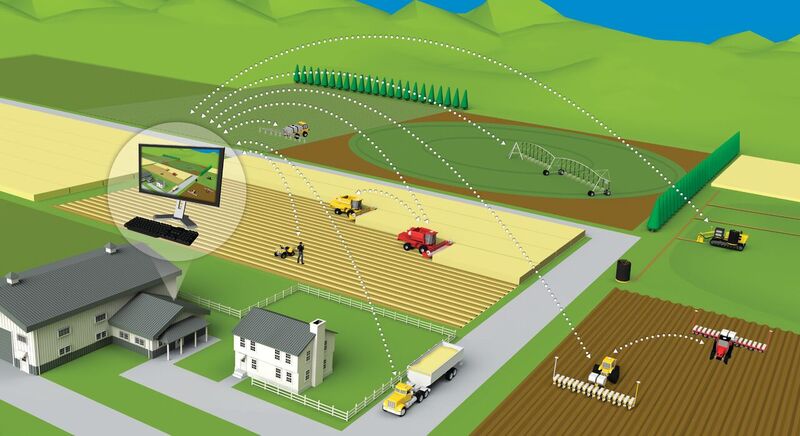

A good example is the autonomous tractor we demonstrated at our most recent Trimble Dimensions. The solution was developed using technologies generally available to customers today, with the addition of some newer technologies we developed, including obstacle detection to sense if a person or other obstacle is in the path of the tractor. It also included a module that handles the automated speed control, which we had so far done manually on tractors.

That’s also where we’re headed in the future: adding more intelligence for the machine’s capabilities and performance. As a result, the operator has fewer tasks to perform on the machine. So, at a certain point he or she changes from a machine operator into a supervisor.

It’s important to mention that safety is always the primary concern. We will reach a stage in the development when the system can operate with a very high level of safety. At that point, the operator could then leave, and the machine could be supervised from a remote site–or become fully autonomous. Alternatively, one driver could operate multiple machines.

xyHt: Is remote control a precursor to autonomous operation?

Vollath: Not necessarily, because remote control means operators would still have their eyes and ears on the machine. As an example, they could be in the office watching through the camera on the front of the machine while also controlling the machine via joystick: that is remote control.

Supervised autonomous means the operator would be watching the machine but typically not interfering or controlling it. The operator would assume control only if a situation gets dangerous or too complex. And outside control would be increasingly less necessary as the machine’s control software becomes smarter.

There’s also the concept of orchestrating multiple machines. One operator could control multiple machines, coordinating them to keep them from getting too close to each other. The various machines are on their designated jobs, and–much like a foreman–the operator would supervise all the operations.

It is a complex picture. The development process won’t include a product update that, poof, makes the operator no longer needed. Concerns for safety and the complexity of fully autonomous operation make that scenario unrealistic. But we are moving towards smarter machines without on-machine operators–and we demonstrated that ability at Trimble Dimensions. It’s clear that the machine is capable of doing its regular job autonomously.

A demonstration of an indoor autonomous vehicle: the device uses a digital map to navigate and can refine the map to include new or changed walls or obstacles.

xyHt: As we make the break from remote-controlled to supervised autonomous, a key milestone is that the decisions are being made by the machine itself, and the operator/supervisor is just there for the ride. Correct?

Vollath: Yes, or a remote supervisor, who could manage multiple machines, would just give high-level commands. For example, the supervisor could tell a machine to go to a certain place and create a specific grade or to deliver a load at a certain location and time. The tasks that require human operators will be at higher and higher levels.

It is important to emphasize that the intermediate step between remote control and supervised autonomy is a big one. It’s an even bigger step to go to full autonomy. Remote control is not our focus: we are looking at how and when we can get humans off the machine. At that point the operator’s job description will change to one of supervising machines that do their jobs completely autonomously. The key advantages here are safety, productivity and return on investment.

Another area to consider is the concept of the lead-and-follow system. For example, if a farm has one tractor that is guided or nominally operated by a human operator, then it’s possible to have a “team” of other tractors, combines, or trucks that simply follow that lead machine. Lead-and-follow cultivation is one of the potential intermediate steps, especially given the strong focus on safety and productivity. The main control is still by one person, but that one person’s productivity now has tripled or quadrupled.

xyHt: Several years ago the big news in manufacturing was that companies could go directly from a computer design to an automated milling machine, which could then cut the part. There was no real human interaction between design and manufacture. Is that a reasonable analogy here?

Vollath: Yes. For example, consider a construction project where the site design blends with geospatial data. Data collection will be more and more automated. The Holy Grail would be to load the design onto the machines and then have the entire construction site operation run automatically. But that is obviously still in the future.

The same concepts hold true on a farm. Farmers or agriculture consultants can design the tasks that comprise all the different jobs such as tilling, seeding, fertilization, and harvesting, with the ultimate vision of having everything take place automatically.

Autonomous vehicles can become important parts of modern farm practices. Functions, including tilling, seeding, spraying, and harvesting, can be performed using autonomous operation.

xyHt: What core technologies are in play to address these markets?

Vollath: There is an old joke about navigation: It’s not about where you are, but about where everything else is–and especially if something is where you want to go. If a machine doesn’t know what is around it, then it can’t make a decision. The situational awareness of the machine and how it perceives its surrounding environment is a primary capability. And that capability involves different sensors such as cameras, lidar, radar, and ultrasound, combined with traditional devices like GNSS and inertial navigation systems, to allow the machine to make decisions.

At Trimble Dimensions we also demonstrated an autonomous rover in an indoor environment with some walls and various obstacles. The rover navigated using an internal map, which allowed the machine to figure out where it was relative to the space. It included a risk-perception capability that enabled it to sense if someone was walking in front of the rover or if a new obstacle appeared in the work area. That is the situational awareness component and it needs to be 100% right–it is not an option to bump into something or someone.

xyHt: For positioning, that’s going to be primarily GNSS and inertial. Are there any other technologies in play there?

Vollath: There are situations outdoors where GNSS isn’t the optimal technology to use, particularly in large cities and dense forests. Users can look at additional sensors; inertial is one of them. We also are working on camera- and lidar-based sensors that can collaborate with the INS to give precise location all the time, indoors and outdoors.

Once you have those sensors in place, the technology is related to what is called simultaneous localization and mapping (SLAM). SLAM is a technique where the system builds a model that enables a machine to determine its augmented position. At the same time, the system detects any obstacles in the scene and uses that information to refine the map as the machine moves.

For example, known interior walls can serve as navigational aids to augment positioning sensors, using what is there instead of a dedicated positioning infrastructure. The system could also detect obstacles such as furniture or people. It’s similar to when humans drive a car: the drivers look around and see what is there and then navigate relative to it.
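To make the two halves of that idea concrete, here is a toy sketch in a one-dimensional corridor. It is not a SLAM implementation and not Trimble's method: real systems estimate position and map jointly and track uncertainty in both. The function names, the corridor layout, and the log-odds increments are assumptions chosen for illustration. A rear range reading to a known wall gives the position, and a forward range reading refines an occupancy map.

```python
import math

# Log-odds increments: assumed values for this sketch, not tuned for any sensor.
L_OCC = 0.85   # evidence that a cell is occupied
L_FREE = -0.4  # evidence that a cell is free


def locate_from_wall(wall_cell, rear_range):
    """Position estimate from the distance to a known wall behind the robot."""
    return wall_cell + rear_range


def refine_map(log_odds, robot_cell, front_range):
    """Update the map in place from one forward-looking range reading.

    Cells the beam passed through are nudged toward "free";
    the cell where the beam ended is nudged toward "occupied".
    """
    hit = robot_cell + front_range
    for cell in range(robot_cell + 1, min(hit, len(log_odds))):
        log_odds[cell] += L_FREE
    if hit < len(log_odds):
        log_odds[hit] += L_OCC


def occupancy(log_odds_value):
    """Convert a log-odds value to a probability of occupancy."""
    return 1.0 / (1.0 + math.exp(-log_odds_value))


# Ten-cell corridor, all cells unknown (log-odds 0 means probability 0.5).
corridor = [0.0] * 10

# Invented readings: (range to the known wall at cell 0, range to whatever is ahead).
for rear, front in [(2, 5), (3, 4), (4, 3)]:
    pose = locate_from_wall(0, rear)
    refine_map(corridor, pose, front)

likely_obstacle = corridor.index(max(corridor))
print(likely_obstacle, round(occupancy(corridor[likely_obstacle]), 2))
```

Repeated readings that agree push a cell's value further from zero, which is how a new obstacle gradually becomes part of the map while a single spurious reading does not.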

xyHt: What are some of the more interesting markets and applications?

Vollath: The early opportunities for autonomous vehicle development are definitely in the agriculture arena. The main reason is that a farm field is typically empty; there are fewer people, vehicles, or other obstacles to deal with, and the surfaces are relatively uniform. So safety systems can be optimized for that environment.

The other big outdoor markets are construction and mining. These applications are more challenging because there are workers and other machines moving around, so operators will always need to avoid or take into account other objects. Within these arenas, however, mining tasks such as drilling and hauling are good candidates for autonomous developments.

We are also supporting the autonomous car industry. We have customers in the automotive autonomy field for several solutions, including our inertial technologies and the use of Trimble RTX positioning services. RTX enables users to obtain absolute positions worldwide to a few centimeters as long as they have GNSS satellite coverage, so it is a pretty powerful tool.

There are also a number of possibilities indoors where users can leverage an internal-map approach as one basis for positioning. Construction and cleaning or maintenance all have good potential. Building construction is very interesting: it ties nicely to BIM, and autonomous machines could perform certain dangerous or repetitive tasks that have high rates of worker injuries.

xyHt: What are some of the most important challenges that are ahead for autonomous operations?

Vollath: The overwhelming concern is safety. A key concept of robotics is to reduce risk to humans; autonomous machines must be very, very safe. On the business side there’s the need to prove increased productivity and good ROI. The watchwords there are less rework, more consistent quality, higher productivity, and fewer machines deployed.

xyHt: What about challenges in terms of market acceptance? Are people willing to look seriously at autonomous technologies?

Vollath: Yes, everybody is interested. The big question is initial investment and ROI. For example, today in agriculture many commodity prices are very low, which hampers investment. The bigger farms understand that precision farming helps them reach the required levels of productivity. Just like GNSS and other disruptive technologies, the markets need some good early adopters to prove to the rest of the industry that they can run their business much better using autonomy.

So, we are not yet at the point where adoption begins to grow rapidly, but that will come soon.

On the cost side, volume will drive prices down, especially in the automotive industry, which is also driving that volume. To some extent that may translate into the construction, mining, and agriculture markets, but the volumes there will never match the consumer markets.

xyHt: What would you say are the trends in technology and markets that we should be looking for?

Vollath: Technology-wise, we will see ongoing improvements in sensors and processing. Performance goes up and price goes down. And with the boost in artificial intelligence (AI), we will also see more systems perceive the environment in a much more structured way. AI has become a main trend in autonomy and robotics, and it is actually growing as it matures.

On the business side, people are seeking usable products–something that a user can operate without expertise in computer science and robotics. The systems will be supervised by the people who, today, are doing the work that the machine will ultimately take on, so we need to match the language and workflows. As the acceptance goes up, the value proposition of safety, productivity, and ROI becomes very strong.