A hybrid of geospatial technologies could help autonomous vehicle mapping drive us into a safer, more cost-effective, and fuel-efficient future.

Self-driving cars are fast becoming a reality; it’s estimated that by 2020, there will be around 10 million self-driving cars on the road. Their potential benefits are immense: studies indicate that self-driving cars could significantly reduce traffic deaths, road congestion, and fuel consumption.

Self-driving cars are classified as either semi-autonomous or fully autonomous. A fully autonomous car can drive from point A to point B, handling the entire range of on-road scenarios without any assistance from the driver. Fully autonomous cars are further classified as user-operated or driverless. Driverless cars may take much longer to reach the market because of regulatory and insurance questions, but it is reasonable to expect user-operated, fully autonomous cars to come to market within the next five years.

Companies such as Mercedes, BMW, and Tesla have released, or will soon release, cars with self-driving features. Google currently has an entire fleet of autonomous vehicles, including golf carts and Toyota Priuses, that have logged almost 900,000 miles in cities, on busy highways, and on mountainous roads around California.

Learned Environment

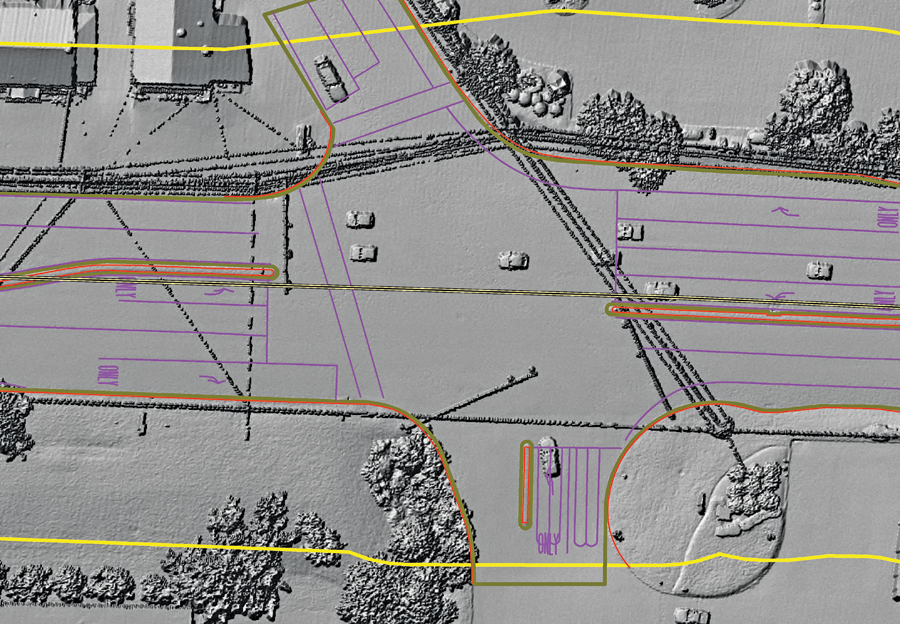

Autonomous vehicles rely on detailed mapping data, which we in the mapping profession provide, to operate properly in their environment. Car manufacturers use different arrays of technology to make their cars operate autonomously. Many of the autonomous cars now in development use lidar, GPS, inertial measurement units (IMU), radar, cameras, and wheel encoders (similar to the distance measurement instrument, or DMI, on a mobile mapping system).

Although these technologies provide most of the information autonomous cars need, it is critical for the car to also gather information from the learned environment so that it gets smarter about driving. Currently, the car learns from its environment by driving a road once and then applying what it learned on that drive the next time it travels the same road.

The autonomous system also requires information that is commonly available on a handheld or phone GPS, including speed limits, work zones, detours, traffic conditions, and road conditions.

Limitations of Mobile Mapping Alone

Several car companies have earmarked millions of dollars for autonomous vehicle mapping, most of it for mobile mapping, which combines mobile lidar, GPS, IMU, and 360-degree video. The question is: Is this the best technology for the application? Mobile mapping provides a detailed solution, but it relies on extensive survey control to reach the accuracies this application requires.

For autonomous vehicle mapping, the data is divided into road sections, and when the position and orientation system (POS) solution is processed, the positional data is subject to GPS gaps or degraded data. This drives the need for extensive survey control to achieve 2 to 3 centimeters of vertical accuracy consistently. For example, on a 40-mile project, the mobile data would need to be tied to approximately 60 highly accurate survey points.
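The scale of that control requirement is easier to picture as a spacing. A quick check, assuming the 60 points are spread evenly along a single 40-mile corridor with a point at each end (the article does not say how they are distributed), gives the implied interval between points:

```python
METERS_PER_MILE = 1609.344  # exact international mile


def implied_spacing(length_miles: float, n_points: int) -> tuple[float, float]:
    """Average spacing between control points, in miles and kilometers.

    Assumes points are evenly spaced along one corridor with a point at
    each end, so n points define n - 1 intervals.
    """
    if n_points < 2:
        raise ValueError("need at least two control points")
    spacing_mi = length_miles / (n_points - 1)
    return spacing_mi, spacing_mi * METERS_PER_MILE / 1000.0


# The article's example: 40 miles of road, roughly 60 survey points.
mi, km = implied_spacing(40, 60)
print(f"{mi:.2f} mi ({km:.2f} km) between control points")
```

With these inputs the spacing is roughly 0.68 mile, or about 1.1 kilometers, between points.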

Additionally, in heavily populated urban areas, departments of transportation may require extensive permitting before crews can access the right-of-way.

Hybrid Approach

What is the alternative? A hybrid approach reduces the need for extensive control and, in most cases, is more cost-effective for this application.

Hybrid mapping combines mapping technologies to provide the most viable solution for a given mapping project or data-collection process. The hybrid approach for autonomous vehicle applications varies, but it is similar to what would be used on a positive train control (PTC) or highway safety project.

For example, airborne lidar combined with imagery collected from high-definition mapping sensors (HDMS) provides an accurate, cost-effective way to limit the interference and potential delays involved in collecting extensive control along a right-of-way. This data, combined with the mobile data (or, potentially, in the absence of mobile data), should provide the required information.

This approach can often be more cost-effective than mobile mapping alone. For example, Caltrans requires permits, which carry significant cost and delay, for access to its right-of-way, and any activity that requires a permit typically also requires hiring a lane-closure company, so HDMS is a viable alternative.

Mobile lidar may still be required for the project, but it can be tied to the HDMS data set. Typical HDMS systems can achieve accuracies of roughly 2 to 3 centimeters vertically and 13 centimeters horizontally. How much control a mobile sensor needs to match this is debatable, but depending on the approach, it comes at a significant cost.
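Vertical accuracy figures like these are normally verified by comparing the lidar surface with independently surveyed check points. A minimal sketch of that comparison, assuming matched elevation pairs have already been extracted at each check point and treating 3 centimeters as a hypothetical pass threshold, computes the vertical root-mean-square error (RMSE):

```python
import math
from typing import Sequence


def vertical_rmse(lidar_z: Sequence[float], survey_z: Sequence[float]) -> float:
    """RMSE of lidar elevations against surveyed check-point elevations (meters)."""
    if not lidar_z or len(lidar_z) != len(survey_z):
        raise ValueError("need matched, non-empty elevation lists")
    squared = [(m - s) ** 2 for m, s in zip(lidar_z, survey_z)]
    return math.sqrt(sum(squared) / len(squared))


def meets_vertical_spec(rmse_m: float, spec_m: float = 0.03) -> bool:
    """Hypothetical pass/fail rule: RMSE at or below the stated accuracy."""
    return rmse_m <= spec_m


# Hypothetical check points: lidar surface elevation vs. surveyed elevation (m).
lidar = [10.02, 11.00, 12.98, 14.01]
survey = [10.00, 11.00, 13.00, 14.00]
rmse = vertical_rmse(lidar, survey)
print(f"RMSEz = {rmse:.3f} m, within 3 cm: {meets_vertical_spec(rmse)}")
```

The same function can be run on mobile and HDMS data against one set of check points, which gives a like-for-like basis for comparing the two.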

The continuous trajectory solution from HDMS provides the basis for an accurate reference lidar data set with far less control. Its positional data (IMU, GPS) is free of the typical breaks that can occur in a mobile POS data set, such as those caused by elevation masking, cycle slips, and loss of lock.
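Such breaks show up in the raw data as missing GNSS epochs, during which the trajectory must be carried by the inertial and odometry data alone. A minimal sketch of flagging them, assuming a sorted list of timestamps for epochs with a valid fix and a hypothetical 1 Hz logging rate, is below. Production POS software also detects cycle slips in the carrier-phase observations, which this sketch does not attempt.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Gap:
    start_s: float  # GPS time of the last good epoch before the gap
    end_s: float    # GPS time of the first good epoch after the gap

    @property
    def duration_s(self) -> float:
        return self.end_s - self.start_s


def find_gnss_gaps(epoch_times_s: Sequence[float],
                   nominal_rate_hz: float = 1.0,
                   tolerance: float = 1.5) -> List[Gap]:
    """Flag intervals where consecutive valid epochs are further apart than expected.

    epoch_times_s: sorted GPS times (seconds) of epochs with a valid fix.
    nominal_rate_hz: hypothetical receiver logging rate.
    tolerance: multiple of the nominal interval treated as a gap.
    """
    limit = tolerance / nominal_rate_hz
    gaps = []
    for prev, curr in zip(epoch_times_s, epoch_times_s[1:]):
        if curr - prev > limit:
            gaps.append(Gap(prev, curr))
    return gaps


# Hypothetical epochs: signal lost between t=3 s and t=9 s, and again after t=11 s.
for gap in find_gnss_gaps([0, 1, 2, 3, 9, 10, 11, 20]):
    print(f"gap from {gap.start_s} s to {gap.end_s} s ({gap.duration_s} s)")
```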

This doesn’t mean that a mobile mapping system (MMS) POS solution is bad: a DMI, a second GPS antenna (in most cases), and processing algorithms can all be used to process the data through these conditions. There are arguments for each technology, often depending on who owns which equipment.

My aim is to present a viable alternative to the technologies typically used for this application, and to offer options when the collection environment poses challenges that would make a project unworkable within its time and budget. Once the HDMS data is collected, a transformation can be computed from tie points common to the airborne and mobile solutions, yielding an accurate combined data set.
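The article does not specify the transformation model. One simple choice, assumed here for illustration, is a least-squares 2D similarity (Helmert) transform in the horizontal plane plus a single vertical offset, fitted to tie points identified in both data sets. The sketch maps mobile coordinates onto the HDMS frame; the function and class names are hypothetical, and a long corridor may need a piecewise or more flexible model, which this does not attempt.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class TieTransform:
    a: float   # scale * cos(rotation)
    b: float   # scale * sin(rotation)
    tx: float
    ty: float
    tz: float

    def apply(self, p: Point) -> Point:
        x, y, z = p
        return (self.a * x - self.b * y + self.tx,
                self.b * x + self.a * y + self.ty,
                z + self.tz)

    @property
    def scale(self) -> float:
        return math.hypot(self.a, self.b)

    @property
    def rotation_deg(self) -> float:
        return math.degrees(math.atan2(self.b, self.a))


def fit_tie_transform(src: Sequence[Point], dst: Sequence[Point]) -> TieTransform:
    """Fit dst ~ transform(src): 2D Helmert horizontally, mean offset vertically."""
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched tie points")
    xc, yc = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    Xc, Yc = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    s_xx = s_a = s_b = 0.0
    for (x, y, _), (X, Y, _) in zip(src, dst):
        dx, dy, dX, dY = x - xc, y - yc, X - Xc, Y - Yc  # centered coordinates
        s_xx += dx * dx + dy * dy
        s_a += dx * dX + dy * dY
        s_b += dx * dY - dy * dX
    if s_xx == 0.0:
        raise ValueError("tie points must not all coincide horizontally")
    a, b = s_a / s_xx, s_b / s_xx
    tx = Xc - a * xc + b * yc
    ty = Yc - b * xc - a * yc
    tz = sum(q[2] - p[2] for p, q in zip(src, dst)) / n
    return TieTransform(a, b, tx, ty, tz)
```

It may be worth inspecting the fitted `scale` and `rotation_deg`: values far from 1 and 0 can indicate mismatched tie points rather than a genuine difference between the data sets.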

Relative Accuracy and Data Density

Autonomous vehicle datasets depend on relative accuracy, that is, on knowing where the car is relative to everything around it, so the relative accuracy of the hybrid data needs to be very good. Mobile lidar has been shown to achieve very good relative accuracy, in the neighborhood of 8 millimeters with some systems. A properly calibrated HDMS system achieves relative accuracy in the neighborhood of 1 centimeter vertically.

Projects like PTC require good relative accuracy, but their absolute accuracy requirement is much looser than you might expect: PTC requires a horizontal accuracy of roughly 7 feet and a vertical accuracy of roughly 3 feet.

Autonomous vehicle mapping requires very good relative and absolute accuracy, far tighter than PTC. The absolute accuracy requirement is usually better than 10 centimeters, or, as users put it, “the best you can achieve for the price.” A hybrid approach would not necessarily be expected to deliver “the best you can achieve for the price,” since these users need thousands upon thousands of miles of data to make the application work.
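To put the two requirements side by side, the PTC tolerances can be converted to metric and compared with a 10-centimeter absolute target. This is a minimal sketch; it assumes, for illustration only, that the 10-centimeter figure applies to both the horizontal and vertical axes, which the requirement does not specify.

```python
FEET_TO_METERS = 0.3048  # exact international foot


def looseness_ratio(tolerance_ft: float, target_m: float = 0.10) -> float:
    """How many times larger a tolerance in feet is than a metric target."""
    return tolerance_ft * FEET_TO_METERS / target_m


# PTC tolerances from the text (feet); 0.10 m is the assumed AV target.
ptc_tolerances_ft = {"horizontal": 7.0, "vertical": 3.0}
for axis, tol_ft in ptc_tolerances_ft.items():
    print(f"PTC {axis}: {tol_ft * FEET_TO_METERS:.2f} m, "
          f"about {looseness_ratio(tol_ft):.0f}x the 10 cm target")
```

PTC’s horizontal tolerance works out to roughly 2.1 meters, about 21 times the 10-centimeter figure, and its vertical tolerance to roughly 0.9 meters, about 9 times.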

Car manufacturers are using a variety of technologies and accuracy requirements to build autonomous vehicle datasets. One thing seems clear: the denser the data, the better. It stands to reason that the denser the real-time data, the better the car will operate on its own.

Most of the data describing the car’s real-time surroundings is detailed and comprehensive. This raises the question: Does the base information need to be as detailed as the real-time data? Why or why not? Does the hybrid approach provide the necessary information? Can an approach other than the almost universally used mobile mapping sensors provide enough information?

Existing database information, covering a range of transportation data, is available and is being used together with hybrid-collected data to compile the base information autonomous vehicles need. But is super-detailed elevation information necessary? Honestly, only the designers of autonomous cars can answer that.

Right now, a significant number of autonomous vehicle mapping projects are underway around the world, and research indicates that requirements vary significantly by car manufacturer. It would be reasonable to state that a data set of 65 to 150 points per square meter, combined with the right additional hybrid data such as 360-degree video, HD video, and other imagery, may suffice for this application.
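Density figures like these are usually computed by binning points into a grid. A minimal sketch, assuming the figure means points per square meter and using hypothetical 1-meter cells, averages the count over occupied cells only, so areas outside the collected corridor do not dilute the result (at the cost of hiding gaps inside it):

```python
import math
from typing import Iterable, Tuple


def mean_point_density(points_xy: Iterable[Tuple[float, float]],
                       cell_m: float = 1.0) -> float:
    """Mean points per square meter over grid cells containing at least one point."""
    count = 0
    occupied = set()
    for x, y in points_xy:
        # Integer cell index for each point; points on a boundary go to the higher cell.
        occupied.add((math.floor(x / cell_m), math.floor(y / cell_m)))
        count += 1
    if not occupied:
        return 0.0
    return count / (len(occupied) * cell_m * cell_m)


# Hypothetical points: four in one 1 m cell, two in the neighboring cell.
pts = [(0.1, 0.1), (0.5, 0.5), (0.9, 0.2), (0.3, 0.8), (1.2, 0.4), (1.7, 0.6)]
print(f"{mean_point_density(pts):.1f} points per square meter")
```

Reporting a per-cell minimum alongside the mean would expose thin patches that the mean alone conceals.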

The thousands of points from mobile lidar do provide a very good solution for this application, but is the extensive survey behind them really needed? Where is the line for return on investment? At what point does more data become too much? At what point does the cost of those points justify building a solution that gives users just the right amount of data to meet all the requirements?

Ironically, the emerging autonomous car industry relies on the same technology the mapping profession has used for years, and it depends heavily on the mapping profession to provide the data and information its application needs. Only time will tell which approach is best for this application; as mapping professionals, we should continue to innovate.